INTRODUCTION

K2’s Quality Assurance (QA) processes are designed to be used by developers and testers involved in the actual delivery process. The content of this document is technical, and it can be regarded as best practice for the QA side of the development process. As such, this document encapsulates the ‘how’ of the QA discipline.

This document builds on the principles established in the K2 Quality Assurance Method document on the CoE site, here.

OVERRIDING PRINCIPLES

Note: This section details a bias towards quality. Always adhere to the points below. Any deviation requires a ‘change log’ decision entry by the Project Manager.

- Always test early, test often.
- Testing is done as part of the gated exit of a development cycle.
- A given piece of development must deliver a set of requirements. These requirements must include acceptance criteria, which are used to determine whether the requirement can be tested as complete.

GLOSSARY OF TERMS

| Term | Description | Example |
| --- | --- | --- |
| Regression suite | A set of tests used to ensure that something that previously worked continues to work in the same manner. | Version 1.0 of the Claims system allows a user to have a claim with a single line item. This is a regression test that must pass for all later versions for backward compatibility. |
| Test surface area | A description of the areas that need to be regressed as the result of a change made in the code base, based on the impact area given by the development team. | As the logic governing single versus multiple lines in the claim system has changed for this release, a sample of historical claims needs to be tested to ensure that the new rules don’t break the historical reports. |
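
One way to make the test surface area actionable is to tag each regression test with the areas it covers, then select the tests that overlap the impact area supplied by the development team. The following is a minimal sketch under that assumption. It is written in Python for brevity; the tagging scheme, test names and area tags are hypothetical and are not part of the K2 method or of TFS.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class RegressionTest:
    """A regression test tagged with the functional areas it exercises."""
    name: str
    areas: frozenset = field(default_factory=frozenset)


def select_by_surface_area(tests, impact_areas):
    """Return the tests whose areas overlap the impact area given by development."""
    impacted = set(impact_areas)
    return [t for t in tests if t.areas & impacted]


# Hypothetical regression suite for the Claims system example.
SUITE = [
    RegressionTest("Claim with single line item", frozenset({"claim-lines"})),
    RegressionTest("Historical claims report", frozenset({"claim-lines", "reporting"})),
    RegressionTest("User login", frozenset({"authentication"})),
]

# The development team reports that the claim line logic has changed.
selected = select_by_surface_area(SUITE, ["claim-lines"])
print([t.name for t in selected])
```

In practice the tags would live alongside the test cases in the test documentation repository so that the same selection can be made by query.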

PROCESS FLOW

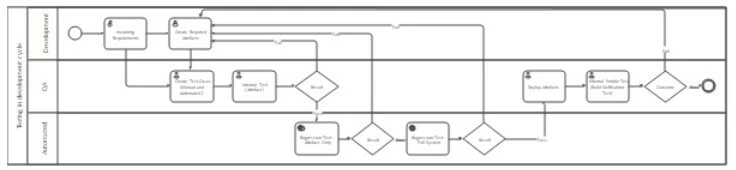

There’s a lifecycle associated with creating an artifact, see below:

This diagram shows the testing done after the development effort. The test effort may seem large, but it deals with all of the likely points where defects can be introduced:

- Manual tests are documented in the form of Test Plans, Suites and Test Cases.
  - This provides concrete evidence of a working component in the current QA environment.
  - This is intended for communication to stakeholders, i.e. the result of any outcome is a set of both passed and failed test cases.
- Automated tests support the manual tests and start to remove some of the manual regression requirements (which saves time when software will have multiple releases).
  - Full regression would be automated.

FORMALITIES OF QA

QA is about gathering evidence and reporting that evidence to the team and ultimately to the stakeholders. Can an application ship with defects? Yes. This all depends on the risk tolerance of the given system. Medical systems need to be tested to a much greater extent than business systems because of the potential for loss of life. Know that business users won’t react well to software with a high number of defects either. In the set of K2 delivery methodologies, TFS (either on premise or online) is used as a defect tracking tool (it is also used for project management, development, build and deployment, etc.).

Note: The naming examples given here are to be decided at a project level. Always consider the ability to query functionality post test execution. The question: “Was this item tested in the last run?” must be able to be answered by a simple query against the test documentation repository (TFS).

The following graph is an example of the current state of test cases for a given test suite:

This would aid the stakeholder communication mentioned earlier. In this particular case, knowing that 3 test cases were blocked and 2 failed would mean that the code is not yet at the correct level of quality for release to production.
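
The release reasoning above can be sketched as a small tally over test case outcomes. This is an illustration only, written in Python. The rule that any failed or blocked test case holds the release is an assumption drawn from the example, and the count of passed cases is hypothetical. In practice these figures would come from a query against TFS rather than a hard-coded list.

```python
from collections import Counter

# Assumed rule: these outcomes mean the suite is not ready for production.
HOLDING_OUTCOMES = ("Failed", "Blocked")


def summarize(outcomes):
    """Count test case outcomes for a suite."""
    return Counter(outcomes)


def ready_for_release(outcomes):
    """True only when no test case is failed or blocked."""
    summary = summarize(outcomes)
    return all(summary[o] == 0 for o in HOLDING_OUTCOMES)


# Hypothetical suite state: 3 blocked and 2 failed, as in the example above.
suite = ["Passed"] * 10 + ["Blocked"] * 3 + ["Failed"] * 2

print(dict(summarize(suite)))
print("Ready for release:", ready_for_release(suite))
```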

TEST PLANS

Test Plan – End to End System

- Test Suite 1
- Test Suite 2..

A test plan is the overall container for the test suites, which are in turn containers for test cases. A test case is the most granular level and its outcome is binary: it either passes, or it fails and has a defect associated with it. (Note: in the VS tooling the options are pass, fail, blocked and not applicable; the latter two fall outside the aforementioned binary.)

Test plans often exist at numerous functional levels, and every set of test plans should have a regression suite. Ideally the regression suite should be automated. Test plans will likely contain suites that cover requirements. Importantly, the overall test plan is the master set of test cases for a full end-to-end system test. Understanding the test surface area and categorizing tests correctly will help the testers determine what needs to be run for a given set of requirements. Therefore, the test plan should have all tests for a particular system version.

The name of the test plan would usually reflect the business requirement that it is testing for within the system. For example:

- HR System - Expense Claim
- [System Name] – [Business Requirement]

TEST SUITES

The test suites are related to a specific requirement within a system. The test suite is intended to completely validate the requirement that it is named for. An example of this would be:

- Functional - Expense Claim – Positive
- [Test Purpose {Functional/Non Functional/…}] - [Requirement Area] - [Test Function {Positive/Negative/…}]
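
Because naming is what makes the test documentation queryable after execution, it can help to generate names from their parts rather than type them by hand. The sketch below is one possible approach in Python, not a K2 or TFS feature. The function names and the " - " separator are assumptions; the project decides the actual convention.

```python
SEPARATOR = " - "  # assumed separator; the project decides the real convention


def plan_name(system, requirement):
    """Build a test plan name: [System Name] - [Business Requirement]."""
    return SEPARATOR.join([system, requirement])


def suite_name(purpose, area, function):
    """Build a test suite name: [Test Purpose] - [Requirement Area] - [Test Function]."""
    return SEPARATOR.join([purpose, area, function])


def suites_for_area(suite_names, area):
    """Find the suites covering a requirement area using the names alone."""
    matches = []
    for name in suite_names:
        parts = name.split(SEPARATOR)
        if len(parts) == 3 and parts[1] == area:
            matches.append(name)
    return matches


names = [
    suite_name("Functional", "Expense Claim", "Positive"),
    suite_name("Functional", "Expense Claim", "Negative"),
    suite_name("Non Functional", "Leave Request", "Positive"),  # hypothetical area
]

print(plan_name("HR System", "Expense Claim"))
print(suites_for_area(names, "Expense Claim"))
```

A consistent separator is what allows a simple query to answer whether a given requirement area was covered in the last run.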

TEST CASES

A test case must produce a binary result: it either passes, or fails and produces a defect. All examples below are generalized to the use of K2 SmartForms. Where concrete examples are given, the expense claim system example is used; it can be found here.

Note: not all controls exist in the given example.

Naming sample:

- Validate AutoComplete Function
- [Test Type {Validated/Security/…}] [Control Type / Control Name / Area / Specific Item Under Test]

Test cases usually cover a set of requirements per control / item / scenario:

- Validate Blank Text Entry Fails
- Validate MaxLength (200) Text Entry Succeeds
- Etc.

| Control | Example Test Cases |
| --- | --- |
| AutoComplete | |
| Button / Toolbar Button | |
| Calendar | |
| Check Box / Choice | a. Does validation change anything on the form that could prevent a user from proceeding (if required)? [functional test, negative test] |
| Drop-Down List | |
| File Upload | |
| Hyperlink | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Listbox | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Lookup | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Multi-Select | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Picker | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Radio Button | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Rating | In real world scenarios the goal is to see if there is validation and data tied to this control: a. It has a default, must this change? [functional test] |
| Rich Text | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Slider | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Text Box | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| Tree | In real world scenarios the goal is to see if there is validation and data tied to this control: |
| View | Technically redundant as the above tests would not work if this were not working. In real world scenarios the goal is to see if there are rules bound to the view: |
| Form / Sub Form | Technically redundant as the above tests would not work if this were not working. In real world scenarios the goal is to see if there are rules bound to the form: |
| Popup (Confirmation) | In real world scenarios the goal is to solicit user input: |
| Popup (Modal) | In real world scenarios the goal is to solicit user input: |
| Validation | There are a number of items to consider during validation. Likely a white box test method should be employed at a first pass to ensure that the test coverage will cover the surface area. Note: the number of rules on Forms can be significant, exploratory testing is an alternative strategy and automation is recommended. Numbers: a. Currency; b. Decimal; c. Rounding (i. Observe mathematical rules). Text: a. Max length; b. Wrap around; c. Scrolling (horizontal / vertical); d. Data shown in label after input via textbox / text area; e. Rich text format; f. JS injection; g. HTML injection. Logic: a. Expressions (i. Logic errors in boundaries); b. Rules (i. Ranges and boundaries); c. Confirmation (i. Correct action taken). UX: a. If this checkbox is true, then show view X; b. Navigation; c. Save and continue; d. Wizard. |
| Etc. | There are a number of items not listed: expressions, parameters, themes, etc. These items need to be covered as part of test coverage; experience needs to dictate the level of coverage and documentation. |
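
Validation items such as max length and ranges and boundaries lend themselves to boundary-value test cases. The sketch below generates such cases for a text entry, reusing the test case naming samples from earlier. It is written in Python for illustration. The `validate_text` rule is a hypothetical stand-in for the SmartForms validation under test, and the "MaxLength + 1" case name is an assumed extension of the naming pattern.

```python
def max_length_cases(max_length):
    """Boundary-value cases for a required text entry with a maximum length.

    Returns tuples of (test case name, input text, expected to pass validation).
    """
    return [
        ("Validate Blank Text Entry Fails", "", False),
        (f"Validate MaxLength ({max_length}) Text Entry Succeeds",
         "x" * max_length, True),
        (f"Validate MaxLength + 1 ({max_length + 1}) Text Entry Fails",
         "x" * (max_length + 1), False),
    ]


def validate_text(text, max_length):
    """Stand-in for the rule under test: required, at most max_length characters."""
    return 0 < len(text) <= max_length


# Each case yields a binary outcome: the rule either behaves as expected or not.
for name, text, expected in max_length_cases(200):
    outcome = "Pass" if validate_text(text, 200) == expected else "Fail"
    print(f"{outcome}: {name}")
```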

RUNNING TESTS

The tool used here is Microsoft Test Manager (MTM). A web version is available and has similar functionality, bar the recording capabilities.

To run the tests configured above, the first step is to move them from the ‘Design’ status to the ‘Ready’ status. The general flow is that a tester creates the test cases, which start in ‘Design’ status; after review with the test lead they are changed to ‘Ready’.

Note: The formality of review needs to be observed.

Using MTM, select the ‘Test’ tab; ‘Run Tests’ is automatically selected. To run the tests, select the Run option above the Test Plan. This will run all ‘Active’ tests.

Once ‘Run’ has been selected:

The option to ‘Create action recording’ has significant value and will aid with the creation of defects and logging of repro steps and screen shots. Alternatively, the tester can opt to just ‘Start Test’ and the testing begins. Each test can be marked as:

- Pass
- Fail
- Blocked
- Not Applicable

Each test step can either:

- Pass
- Fail

For any test that fails, a corresponding defect needs to be logged. The run results are automatically recorded and available to be reported on in this screen:

TESTING

Any code that comes to the QA team (or to the nominated tester on a given team), in any delivery method, must fundamentally work. This implies that it has been developer tested and developer reviewed. In addition, specifically in an offshore setup, the overall technical lead takes responsibility for the code base. As such the following reviews must take place:

CODE REVIEW (DEVELOPER)

K2 is generally deployed as a low code solution. This implies that there may not be many traditional developer artifacts to review. This does not preclude the deliverable from review. Most likely the items below will be reviewed:

- SmartForms and Views: Rules, Expressions & Parameters
- SmartObjects and methods
- LOB system interaction
- Web Services
- T-SQL
- Brokers

Formal reviews are always conducted by the senior-most resources on a team. In small projects this may mean seeking a review outside of the immediate project team.

ARCHITECTURE REVIEW

Any system, bar the most simplistic, must have an associated architecture. This review is in part a type of structural testing, although white box test methods may need to be employed to determine architectural compliance. The architecture review requires a conceptual architecture document. Eventually this will look similar to (but is a different artifact from) the threat model. The main coverage points during this review are:

- Logical system connections – a UI should never talk directly to a database for example.
- Logical system separation – in SOA architectures, is there a message bus separating layers?
- Authentication – under which user context will a given call be made?
- Technology type – web service, database, etc.
- Technology fit – using a database to capture audit trails may make more sense than a text file on a server (this is solution dependent).
- Data across the wire protocol – SSL should be used, VPN IPSEC, etc.

ENVIRONMENT REVIEW

An environment review is a ratification of the virtual (or physical) system configurations. This would include the actual servers running the components. It would include open ports, load balancers, web application proxies, and IIS, SQL and Windows servers. The purpose of the review is to check the layout of the physical system, and to ensure that questions around data redundancy, disaster recovery and high availability are answered. The main coverage points during this review are:

- Are architectural components on logical servers? E.g. do not run IIS on a database server.
- Are the tiers correctly delineated? Front end on an IIS server, LOB on a LOB server.
- Are the components logically grouped from a networking perspective? DMZ’ed components should only be able to access the system via a specific gateway.

THREAT MODEL REVIEW

Given the set of security attack vectors that exist for applications, a threat modeling tool is recommended. Microsoft Threat Modeling Tool 2016 (located here) is the current tool of choice. The main coverage points during this review are:

- The flow of data within a system
- Integration points
- Machine boundaries
- Trust boundaries

WHITE BOX TESTING

White box testing is often employed on items that are difficult to assess from a manual test perspective. Often code exception paths are tested via this method. That said, there is value in taking a white box testing approach to the following K2 artifacts:

- SmartForms and Views
  - Rules
  - Expressions
  - Parameters
- Processes
  - Start rules
  - Destination rules
  - Escalation rules
  - Preceding rules
  - Succeeding rules
  - Line rules
- SmartObjects
  - Composite
  - Chained methods

AUTOMATION

For any stable system or a stable part of a system under development, automation may make sense. Truly automated regression should be a goal of any modern system. The following are areas of consideration and test types for automation:

- SmartForms
  - Functional positive testing
  - Data validation
  - Negative testing
  - UX flow testing
- Process
  - Path testing
- SmartObject
  - Create (functional test: read)
  - Update (functional test: read)
  - Delete (functional test: cannot read, i.e. not found)
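
The SmartObject items above describe a create, update and delete cycle in which each step is verified with a read. The sketch below illustrates that pattern in Python against an in-memory stand-in. `InMemorySmartObject` and its methods are hypothetical and are not the K2 SmartObject API; a real automated test would call the SmartObject methods in the QA environment instead.

```python
class InMemorySmartObject:
    """Hypothetical stand-in for a SmartObject; not the K2 API."""

    def __init__(self):
        self._rows = {}
        self._next_id = 1

    def create(self, data):
        row_id = self._next_id
        self._next_id += 1
        self._rows[row_id] = dict(data)
        return row_id

    def read(self, row_id):
        # Returns None when the record is not found.
        row = self._rows.get(row_id)
        return dict(row) if row is not None else None

    def update(self, row_id, data):
        self._rows[row_id].update(data)

    def delete(self, row_id):
        del self._rows[row_id]


def run_crud_regression(smo):
    """Create, update and delete a record, verifying each step with a read."""
    results = {}
    row_id = smo.create({"claimant": "A. Tester", "amount": 100})
    results["create"] = smo.read(row_id) == {"claimant": "A. Tester", "amount": 100}
    smo.update(row_id, {"amount": 150})
    results["update"] = smo.read(row_id)["amount"] == 150
    smo.delete(row_id)
    results["delete"] = smo.read(row_id) is None  # cannot read, i.e. not found
    return results


print(run_crud_regression(InMemorySmartObject()))
```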

STANDARDS

STYLECOP

This is a CodePlex project (found here) that shows warnings for code that doesn’t comply with a defined standard. It is often used in large development teams to allow developers to pick up one another’s work, as in Agile and Extreme Programming (XP) methodologies. The Visual Studio 2015 extension is available here. Once installed, StyleCop can be run by right-clicking the project and selecting ‘Run StyleCop’. Below is sample output from StyleCop.

Note: it is much easier to start with StyleCop from the beginning than it is to retroactively apply it.

Note: Incoming source code (specifically C#) must be StyleCop compliant.

STATIC ANALYSIS

A good measure of quality can come from adherence to standards that exist externally as best practices. Later versions of Visual Studio (tested on Visual Studio 2015) have static analysis built in. All VS projects must have static analysis run on them and must pass it. This works for:

- C#
- Database Projects

Outside of the VS IDE, there are tools for:

- HTML see here
- CSS see here
- JavaScript see here

Some issues are not specifically relevant for given pieces of work. The strong name signing requirement for .DLL files may not be relevant for a broker, which would only ever be loaded by a K2 Server. The items can be justified inline in code, or removed from the standard rule set for the tools, but the warning is there for a reason and implies that some course of action needs to be taken.

Note: Incoming source code (either T-SQL or C#) must have no warnings after running static analysis.

STARTING QA

The process of QA doesn’t start at the time that a developer produces a piece of working code. It starts as early as possible. The artifact that the developer will work from, likely functional and technical requirements, is the exact same artifact that the tester takes to start determining test cases from. By the time the developer starts coding on a feature, that feature should have a corresponding set of test cases which become a standard to which the development team can hold themselves accountable. This implies that better tested code comes out of the development team and into the testing team. This improves overall system quality.

TEST THE SPECIFICATION

The incoming artifact needs to be validated just as much as the outgoing artifact. The incoming information needs to be read and disseminated by the tester. At this stage the tester is looking for logical inconsistencies, overall fit and finish into the existing software (where applicable). The tester needs to understand the requirements (and nuances thereof) in order to produce a set of test cases that provide evidence to satisfy the requirements in terms of the incoming specification. The tester needs to go further in that if a piece of code works functionally then does it work when given erroneous input and does it continue to work in the context of the broader system, i.e. integration testing?

For now, the types of things that should be checked during analysis of the specification are:

- Logic issues
- Flow issues
- Inconsistencies in wireframe format
- Incorrect assumptions
- Missing validation
- Missing state information
- Missing persona information
- Data type issues
- Data conversion issues
- Missing requirements
- Etc. (This list is not intended to be finite.)

At the end of testing the requirements, a set of test cases can be created that will be validated against the developed component.

Note: As the role of QA is to articulate system quality up the chain to stakeholders, the set of test cases should always be as complete as possible regardless of the current level of development effort or chosen implementation methodology. The question: ‘can we ship this software?’ needs to be answered by having evidence to support making that decision.

TEST THE DEVELOPED CODE

At this point test cases exist by which to test the developed component. The component must be developer tested in the official development environment and not on a developer’s own machine. The component must be part of a build cycle and moved to a QA controlled environment via deployment done by a QA resource or, where possible, automated deployment. Once the code base is deployed (and configured where required), the tester can execute the existing set of test cases on the code.

BUILD VERIFICATION TEST (BVT)

For production systems, data usually cannot be entered into the system as reporting can suffer. In cases like this the BVT becomes the definitive measure of whether a deployment was successful. BVTs can vary depending on implementation, but they can be thought of as a set of read-only interactions that do not change the underlying data in the system and are therefore safe to use in a production environment.

SMOKE TEST

A smoke test should take a small amount of time. The window is dependent on the project and the complexity, but the concept is to validate that a given component is ready for an in-depth test run. Before starting with this process, the tester runs a smoke test on the deployed component doing simple things such as:

- Check basic authentication
- Check basic authorization
- Does the component load?
- Can the tester interact with the component?
- Any obvious errors?
- Any blocking issues?

If any of the above fail, then the tester needs to log a defect. If this is blocking, then the testing process stops.
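
The smoke test rule above (log a defect for every failure, stop when a failure is blocking) can be sketched as a small runner. This is an illustration in Python; the `SmokeCheck` type and the check results are hypothetical, and which checks count as blocking is a project decision.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SmokeCheck:
    name: str
    check: Callable[[], bool]  # returns True when the check passes
    blocking: bool = False     # a blocking failure stops the testing process


def run_smoke_test(checks):
    """Run checks in order; record a defect per failure, stop on a blocking one."""
    defects = []
    for item in checks:
        if item.check():
            continue
        defects.append(item.name)
        if item.blocking:
            return defects, False  # testing process stops
    return defects, True           # proceed to the in-depth test run


# Hypothetical results: the component loads, but an obvious error is visible.
checks = [
    SmokeCheck("Check basic authentication", lambda: True, blocking=True),
    SmokeCheck("Does the component load?", lambda: True, blocking=True),
    SmokeCheck("Any obvious errors?", lambda: False),
]

defects, proceed = run_smoke_test(checks)
print("Defects to log:", defects)
print("Proceed to in-depth testing:", proceed)
```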

FUNCTIONAL TEST

Once the code is deployed the question shifts to: does it satisfy the requirements? Part of the value add of QA is to prove either that code works, or that it doesn’t. Once the smoke test has established that the basics function, the real in-depth testing begins, i.e. the tester executes the planned test cases. There are a number of strategies that can be employed to determine if code is functioning as expected. Below is a list of items to check:

- User interaction
  - Buttons
  - Navigation
  - Page flows
  - Debug info embedded on SmartForms or views must not be visible
- Process interaction

NON-FUNCTIONAL TEST

User Experience (UX) is a key factor in the usage of a system. UX has a significant amount of surface area and this type of testing can result in many subjective defects. It is important to set baselines with the team and to test in accordance with those baselines. Anything outside the established baseline is a defect. Things to keep in mind:

- Time to first impression – how long must the user wait before they can start working with a Form?
- Buttons – are they obvious and functional? Are any not required (like multiple buttons that do the same thing)?
- Look and feel – does the application look ‘clean’?
- Fit and finish – does the final product look well thought out and engineered?
- Grammar and spelling mistakes – neither is acceptable.
- Appropriate information on screen per persona – should finance only see finance info?

SECURITY TEST

General security testing needs to apply to any system under test:

- Hardened platform
- Information disclosure
  - system (e.g. which version of .Net)
  - errors (e.g. SQL exception containing the version of SQL Server)
  - personal information (e.g. stored credit card number – in this case always check why it needs to be stored, and err on the side of not storing it at all)
- Minimum permission sets
- Restricted admin accounts
- Two-factor authentication – where applicable

Formal penetration testing by an external company may be a requirement, depending on the system. There are also tools that support automated penetration testing as a first step.
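
As a first, lightweight check for system information disclosure, HTTP response headers can be inspected for values that reveal platform versions. The sketch below, in Python, flags a few headers commonly emitted by IIS and ASP.NET. The header list is an assumption and not exhaustive, and the sample response is hypothetical. It does not replace penetration testing.

```python
# Response headers that commonly reveal platform details on IIS / ASP.NET.
DISCLOSING_HEADERS = (
    "Server",
    "X-Powered-By",
    "X-AspNet-Version",
    "X-AspNetMvc-Version",
)


def disclosed_headers(headers):
    """Return the disclosing headers present in a response (case-insensitive)."""
    present = {key.lower(): value for key, value in headers.items()}
    return {
        name: present[name.lower()]
        for name in DISCLOSING_HEADERS
        if name.lower() in present
    }


# Hypothetical response headers captured from the system under test.
sample = {
    "Content-Type": "text/html; charset=utf-8",
    "Server": "Microsoft-IIS/10.0",
    "x-aspnet-version": "4.0.30319",
}

for name, value in disclosed_headers(sample).items():
    print(f"Potential information disclosure: {name}: {value}")
```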

PERFORMANCE TEST (WEB)

Performance testing makes sense for all systems. For instance, a web page has a certain window in which to render before a user may go to another site. If background loading is required, this will be a stipulated window of time to first impression, but it’s a good general bar as users don’t like to wait for pages to render.

Using Visual Studio 2015, create a ‘Web Performance and Load Test Project’ (it’s under the ‘Test’ option for Visual C#):

In the ‘WebTest1.webtest’ (in the ‘Solution Explorer’) select the ‘Add Recording’ tool bar button and start the recording. This is the simplest way to start and we will use http://help.k2.com as the example.

Note: Replace http://help.k2.com with the URL for the Web Site under test.

Navigate to the system URL (in this case http://help.k2.com)

In this case the recorder also records the search text ‘Hello World!’.

Stop the recorder and remove any superfluous URLs:

Then simply run the test and performance metrics are shown:

The purpose of this test is to look for outliers or errors. A first run on a newly developed website is unlikely to be as smooth as the example. HTTP status codes such as 404 or 302 (or even 500s) should be investigated and remedied, along with poor performance. Once this test runs with acceptable results, additional web tests can be added with the aim of later running load testing.
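
The triage described above can be sketched as a simple split of recorded requests into status issues and slow responses. This is an illustration in Python over hypothetical data, not output from the Visual Studio tooling. The paths, timings and the 3 second threshold are all assumptions.

```python
def triage(results, max_seconds):
    """Split recorded requests into status issues and slow responses.

    results: iterable of (path, http_status, elapsed_seconds).
    """
    status_issues = [(path, status) for path, status, _ in results if status != 200]
    slow = [
        (path, elapsed)
        for path, status, elapsed in results
        if status == 200 and elapsed > max_seconds
    ]
    return status_issues, slow


# Hypothetical results; paths and timings are illustrative only.
results = [
    ("/", 200, 0.42),
    ("/search", 200, 3.80),
    ("/old-page", 302, 0.10),
    ("/missing.css", 404, 0.05),
]

status_issues, slow = triage(results, max_seconds=3.0)
print("Investigate status codes:", status_issues)
print("Investigate slow responses:", slow)
```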

PERFORMANCE TEST (SMO)

Performance testing SmartObjects is a good way to determine throughput and round tripping to integration points. In the case of composite SmartObjects it can yield early warnings about multiple LOB systems and the effects of joining disparate data sources in the K2 Server. The preferred method of performance testing SmartObjects is Unit Testing. To do this:

Add a ‘Unit Test’ to the current performance test project and write the method implementation.

Running the unit test yields a piece of timing information. In this case it is 116ms. If a part of the system is performing badly, unit testing can help determine root causes. Generally, establishing performance baselines for SMOs using unit tests is a good idea.
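
The idea of a timing baseline can be sketched as follows. This example uses Python rather than a Visual Studio unit test, purely for illustration. `list_claims` is a hypothetical stand-in for a SmartObject call, and the 100ms baseline and 25% tolerance are assumed values that a project would set for itself.

```python
import time


def timed_ms(func, *args, **kwargs):
    """Run func once and return (result, elapsed milliseconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, (time.perf_counter() - start) * 1000.0


def within_baseline(elapsed_ms, baseline_ms, tolerance=0.25):
    """True when the timing is no more than `tolerance` above the baseline."""
    return elapsed_ms <= baseline_ms * (1.0 + tolerance)


def list_claims():
    """Hypothetical stand-in for a SmartObject list method."""
    return [{"id": i} for i in range(1000)]


rows, elapsed = timed_ms(list_claims)
print(f"{len(rows)} rows in {elapsed:.1f} ms")

# Comparing the 116ms reading above against an assumed 100ms baseline.
print("Within baseline:", within_baseline(116.0, baseline_ms=100.0))
```

A single timing is noisy, so a baseline is better taken from several runs in the same environment.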

PERFORMANCE TEST (PROCESS)

Note: K2 processes are asynchronous by design.

That said, running a saturation test (looking for memory leaks for example) on processes may be of value to a given system implementation. The K2 product team does extensive performance testing on all parts of the K2 platform, so testing performance on processes in a project context is likely redundant unless an issue is being tracked.

LOAD TEST

Once sets of either web performance tests or unit tests (or both) exist then Load Tests can be created as part of the strategy. To add a load test right click the project and select ‘Add…’ and ‘Load Test’:

In this case it’s going to be run on premise.

There are options on whether to run for a given amount of time, or for a number of iterations.

The scenario should be named and think times should be provided. Think times are used because humans always pause between actions on web pages. This is a measure of realism; zero think times are not going to happen unless the system under test is a web service designed to be accessed by another system.

Use the load pattern that the system will create. In reality user loads fluctuate, but the constant load is useful if a specific response needs to be committed to for a given user load:

Modeling options are provided for the load test:

Currently there is only one test, so add that:

There are network options. These are useful for anything that might need to be run over a WAN in the field, where bandwidth could be throttled:

Browser mixes are important.

Note: this only sets the incoming client type header, it doesn’t actually use any browsers.

Finally, performance counter sets on various computers are very useful for seeing things like servers running out of RAM:

Run the load test in the same manner of running the web performance test and the following results will help troubleshoot performance issues further:

As can be seen from the above, the average test time of 4.71 seconds is longer than the hypothetical 3 seconds that are aimed for in the target. There are a number of possible factors for this before looking to the design of the web page, for instance:

- Network latency
- Warm up time – JIT (Just in Time) compilation incurs a performance penalty, and warm ups are automatically discarded from the result set.
- 95th percentile. Any given web page or unit test should be hit numerous times. As long as the artifact performs correctly 95% of the time, it is acceptable.
- Averages. Run a number of load tests using the exact same configuration (3 or more).
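
The 95th percentile and averaging points above can be sketched numerically. The example below, in Python, uses the nearest-rank method for the percentile, which is one of several common definitions and is an assumption here. The sample timings are hypothetical; only the 3 second target comes from the discussion above.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_target(samples, target_seconds, pct=95):
    """True when the pct-th percentile of the samples is within the target."""
    return percentile(samples, pct) <= target_seconds


# Hypothetical test times (seconds) from three runs with identical configuration.
runs = [
    [2.1, 2.4, 2.2, 2.8, 2.5, 2.3, 2.6, 2.9, 2.7, 6.0],
    [2.0, 2.2, 2.3, 2.5, 2.4, 2.6, 2.7, 2.8, 2.9, 3.0],
    [2.2, 2.1, 2.4, 2.6, 2.3, 2.5, 2.8, 2.7, 2.9, 3.1],
]

for number, samples in enumerate(runs, start=1):
    print(
        f"Run {number}: average {mean(samples):.2f}s, "
        f"95th percentile {percentile(samples, 95)}s, "
        f"meets 3s target: {meets_target(samples, 3.0)}"
    )
```

With only ten samples per run the 95th percentile is simply the slowest sample, which is one reason to hit each page or unit test numerous times.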

AUTOMATED TEST RUNS ON A BUILD IN TFS

There are a few steps to being able to run automated tests on a schedule or via a trigger. Conceptually these are run by a Test Agent, which is a piece of software that will do the actual run. These are reported to a Test Controller that will collate the information and store things like failure rates, performance counters, iterations, etc. The reason for the Agent and Controller split is scalability: many agents can create additional test loads, generally at around 1,000 users per agent (see here). The below diagram illustrates this:

There is a cloud-based version of this; for more info see here. Automated tests can also be linked to builds, in which case the following considerations apply:

- If associated with an automated build, this requires a Queue (controlled by a Build Agent, not to be confused with the Test Agent above).
- This will require Build Admin permission, which is at the collection level, not the project level.
- Queues and Agents permissions are also at the collection level.
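
Returning to the Agent and Controller split described earlier in this section, the number of Test Agents needed for a target load can be estimated from the approximate figure of 1,000 users per agent. The sketch below is a rough sizing aid in Python. The per-agent figure depends on hardware and test complexity, so it is treated as a tunable assumption.

```python
import math

USERS_PER_AGENT = 1000  # approximate figure quoted earlier; tune per environment


def agents_needed(virtual_users, users_per_agent=USERS_PER_AGENT):
    """Estimate the number of Test Agents needed to generate the user load."""
    if virtual_users <= 0:
        return 0
    return math.ceil(virtual_users / users_per_agent)


for users in (250, 1000, 2500):
    print(f"{users} users -> {agents_needed(users)} agent(s)")
```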